I propose extending the “Speech-to-Text” AI services commonly used for human speech (e.g. for language translation) so that they also work for horses.

Horse owners tend to understand what their own animals are telling them, but there is no accepted “universal language” of horse sounds that humans can reliably interpret.

The project could run in several phases. As an example, they are described briefly below:

- Data Harvesting: a User connects to the Market via their portable device and completes a secure profile that identifies them and their animals (age, breed, condition, temperament, etc.). The sound data could come from past recordings or from real-time input “on demand” (similar to Smart Speaker tech). The service could be free to the User whilst the AI Corpus is being created.

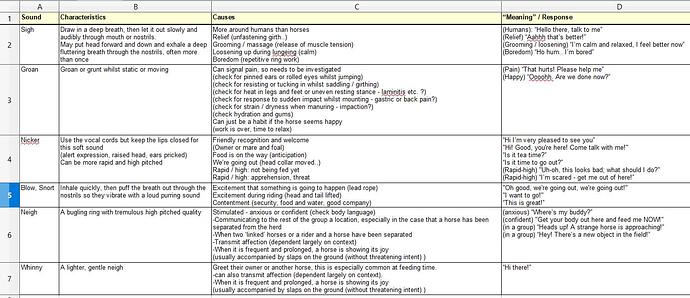

- Data Analysis: the characteristics, causes and responses for “generic sounds” are detailed in the attached image (“004_Default Horse Sounds.jpg”). As more data is collected, it becomes possible to differentiate between breeds and between individual horses in different contexts.

- As the Corpus and the quality of analysis improve, Users are encouraged to rate the accuracy of the feedback given by the AI service, which creates a virtuous circle of improvement.

- Once the quality reaches a certain standard, an app that starts out as entertainment becomes beneficial in the longer term, contributing to the health and welfare of the animals.
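
As a rough illustration of the phases above, the sketch below shows one way the profile data, the recordings and the User accuracy ratings could be represented. Everything in it is an assumption made for illustration: the class and field names, the 1 to 5 rating scale, the placeholder sound label and the `threshold` value are hypothetical and not part of any existing service.

```python
from dataclasses import dataclass, field
from statistics import mean
from typing import List, Optional


@dataclass
class HorseProfile:
    """Hypothetical profile record using the attributes listed in the Data Harvesting phase."""
    name: str
    age_years: int
    breed: str
    condition: str
    temperament: str


@dataclass
class Recording:
    """One past or real-time sound clip plus the service's interpretation of it."""
    horse: HorseProfile
    audio_path: str
    predicted_label: str  # placeholder label for the interpreted sound
    ratings: List[int] = field(default_factory=list)  # User scores, 1 (wrong) to 5 (accurate)

    def rate(self, score: int) -> None:
        """Store a User's accuracy rating for this interpretation."""
        if not 1 <= score <= 5:
            raise ValueError("score must be between 1 and 5")
        self.ratings.append(score)


def mean_accuracy(recordings: List[Recording]) -> Optional[float]:
    """Average User rating across all rated recordings, or None if nothing is rated yet."""
    scores = [s for r in recordings for s in r.ratings]
    return mean(scores) if scores else None


def meets_standard(recordings: List[Recording], threshold: float = 4.0) -> bool:
    """True once the average rating reaches the (assumed) quality threshold."""
    avg = mean_accuracy(recordings)
    return avg is not None and avg >= threshold
```

A User would create a `HorseProfile` once, attach each clip as a `Recording`, and call `rate()` after seeing the interpretation. The aggregated ratings are what would feed the improvement loop and signal when the quality standard has been reached.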