Researchers in U of T Engineering have designed a ‘privacy filter’ that disrupts facial recognition algorithms. The system relies on two AI-created algorithms: one performing continuous face detection, and another designed to disrupt the first.

Abstract

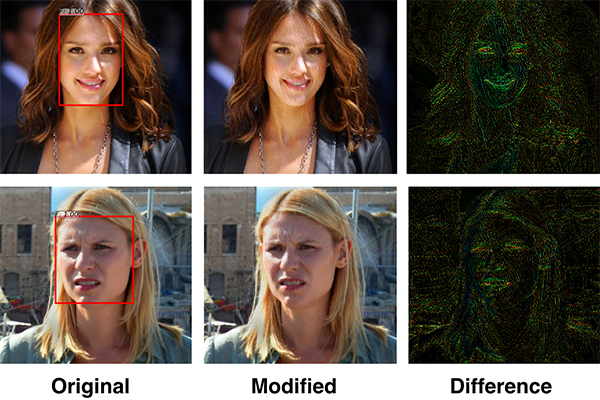

Adversarial attacks involve adding small, often imperceptible perturbations to inputs with the goal of getting a machine learning model to misclassify them. While many different adversarial attack strategies have been proposed on image classification models, object detection pipelines have been much harder to break. In this paper, we propose a novel strategy to craft adversarial examples by solving a constrained optimization problem using an adversarial generator network. Our approach is fast and scalable, requiring only a forward pass through our trained generator network to craft an adversarial sample. Unlike in many attack strategies, we show that the same trained generator is capable of attacking new images without explicitly optimizing on them. We evaluate our attack on a trained Faster R-CNN face detector on the cropped 300-W face dataset, where we manage to reduce the number of detected faces to 0.5% of all originally detected faces. In a different experiment, also on 300-W, we demonstrate the robustness of our attack to a JPEG compression based defense: a typical JPEG compression level of 75% reduces the effectiveness of our attack from only 0.5% of detected faces to a modest 5.0%.
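To make two ideas from the abstract concrete, here is a minimal Python sketch, not the paper's implementation. It shows (1) how a perturbation can be kept small before it is added to an image, and (2) how a figure like "0.5% of all originally detected faces" is computed. The function names, the per-pixel bound `epsilon`, and the face counts are all hypothetical and chosen for illustration; the paper's actual constraint and generator network may differ.

```python
def apply_bounded_perturbation(image, perturbation, epsilon):
    """Add a perturbation to a flat list of pixels in [0, 1].

    Assumption: the size constraint is a per-pixel bound of +/- epsilon.
    This is an illustrative choice, not necessarily the paper's constraint.
    """
    adversarial = []
    for pixel, delta in zip(image, perturbation):
        # Keep the change to each pixel within the allowed budget.
        delta = max(-epsilon, min(epsilon, delta))
        # Keep the result a valid pixel intensity.
        adversarial.append(max(0.0, min(1.0, pixel + delta)))
    return adversarial


def surviving_detection_rate(detected_before, detected_after):
    """Percentage of originally detected faces still detected after the attack."""
    if detected_before == 0:
        raise ValueError("no faces were detected before the attack")
    return 100.0 * detected_after / detected_before


# Toy inputs: three pixels and a raw perturbation (made-up values).
image = [0.0, 0.5, 1.0]
raw_perturbation = [0.2, -0.01, 0.03]
adversarial = apply_bounded_perturbation(image, raw_perturbation, epsilon=0.05)

# Hypothetical counts, not taken from the paper's experiments.
rate = surviving_detection_rate(detected_before=1000, detected_after=5)
```

In this toy run the first perturbation value is cut back to the `epsilon` budget and the last pixel is clipped to the valid range. A lower surviving detection rate means a more effective attack, which is why a rise from 0.5% to 5.0% under JPEG compression counts as a reduction in effectiveness.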

Article

Research Paper

https://joeybose.github.io/assets/adversarial-attacks-face.pdf