
Looks great! I love the level of enthusiasm and commitment.

How can this project become part of SingularityNET?

I’m mainly here to follow and support SingularityNET, so to be honest, I’d not really considered that question.

That said, I’m sure there is a market for a generally intelligent agent, and it will be interesting to see if I can eventually find a niche.

If you manage to make it work, you will be the king.

You should absolutely put your neuromorphic AI agent onto SingularityNET so that it can benefit others and earn returns on the services it carries out.

Let it take part in the AI economy.

I draw the line at simulating compression waves hitting the hair cells in the inner ear, hence the audio module. The AGI was having problems recognising similar words; the problem (besides a really crappy microphone) was the definition of the key frequencies within the audio module. I’ve implemented a Blackman-Harris windowed filter and custom noise suppression to help enhance the key frequencies of speech.

The spectrogram now looks a lot cleaner, with well-defined power bands and clean gaps.
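For readers curious what a Blackman-Harris front end does, here is a minimal pure-Python sketch. It is not the author's audio module: it assumes the standard 4-term Blackman-Harris window, a plain DFT for the per-frame power spectrum, and simple spectral subtraction (removing an estimated noise floor from each bin) as a stand-in for the custom noise suppression, which the post doesn't describe. The function names, the 8 kHz sample rate and the 256-sample frames are hypothetical.

```python
import cmath
import math
import random

# Hypothetical front end: standard 4-term Blackman-Harris coefficients (symmetric form).
BH_COEFFS = (0.35875, 0.48829, 0.14128, 0.01168)


def blackman_harris(n: int) -> list[float]:
    """Symmetric 4-term Blackman-Harris window of length n."""
    if n == 1:
        return [1.0]
    a0, a1, a2, a3 = BH_COEFFS
    return [
        a0
        - a1 * math.cos(2 * math.pi * k / (n - 1))
        + a2 * math.cos(4 * math.pi * k / (n - 1))
        - a3 * math.cos(6 * math.pi * k / (n - 1))
        for k in range(n)
    ]


def power_spectrum(frame: list[float]) -> list[float]:
    """Power in bins 0..n/2 of a Blackman-Harris windowed frame (plain O(n^2) DFT)."""
    n = len(frame)
    x = [s * w for s, w in zip(frame, blackman_harris(n))]
    return [
        abs(sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))) ** 2
        for k in range(n // 2 + 1)
    ]


def spectral_subtraction(power, noise_power, over=1.5):
    """Assumed noise suppression: subtract an over-weighted noise floor per bin, clamp at 0."""
    return [max(p - over * nf, 0.0) for p, nf in zip(power, noise_power)]


# Usage: a 500 Hz tone in light noise, sampled at 8 kHz in 256-sample frames.
fs, n = 8000, 256
rng = random.Random(1)
noise_only = [rng.gauss(0, 0.05) for _ in range(n)]
noisy_tone = [math.sin(2 * math.pi * 500 * t / fs) + rng.gauss(0, 0.05) for t in range(n)]
raw = power_spectrum(noisy_tone)
clean = spectral_subtraction(raw, power_spectrum(noise_only))
print(raw.index(max(raw)), clean.index(max(clean)))  # both 16: the tone sits in bin 500 * n / fs
```

The window's very low sidelobes keep energy from leaking out of the tone's bin into neighbouring bands, and the subtraction step zeroes bins that don't rise clearly above the noise estimate. A production version would use an FFT (e.g. NumPy or SciPy) rather than this O(n²) DFT.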

Short video showing the clarity of the new audio input module at work.

This test connectome is only a few hours old and has been exposed to various audio sources, including spoken language (and me singing to Pink Floyd, lol). It has learned to recognise/categorise the base English phoneme set and the usual/common order of phonemes in word construction/language… from scratch.

For this test the connectome is recognising the spoken numbers 1–9 (although they could be any words); the progression of the graph (lower left) shows each number being cleanly recognised by the connectome’s attention networks.

The information for the graph is gathered from cortical areas other than the audio cortex that learn to specialise in attention. They learn the similarities in the spatiotemporally compressed audio/visual/tactile sensory streams within the GTP (Global Thought Pattern) and are concentrated in clusters along the apex of the lobes. These areas are key to the connectome’s ‘imagination’ and represent how the words are perceived in its own mental model of reality.

You can also see that the model has no ocular input stream, so the plasticity of the learning model has recruited the visual cortex area to enhance audio recognition.

Each spoken word is constructed from approx. 500 × 50 = 25,000 data points, each a digit 0–9 (i.e. 500 fifty-digit numbers), and is recognised from amongst all possible combinations (which is a lot) within 5 ms.
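A quick check of the arithmetic, assuming (as the "0–9" suggests) that each of the 25,000 data points independently takes one of ten values, so the space of possible words is 10^25,000. The helper name `pattern_space` is hypothetical.

```python
import math


def pattern_space(frames: int, digits_per_frame: int, values_per_digit: int = 10):
    """Hypothetical helper: data points per word and log10 of the number of possible words."""
    data_points = frames * digits_per_frame
    # Assumption: each data point independently takes one of `values_per_digit` values,
    # so there are values_per_digit ** data_points distinct possible words.
    log10_count = data_points * math.log10(values_per_digit)
    return data_points, log10_count


points, log10_count = pattern_space(500, 50)
print(points)                   # 25000
print(f"10^{log10_count:.0f}")  # 10^25000 possible words
```

The sketch stays in log10 rather than printing `10 ** 25000` directly, because Python 3.11+ by default refuses to convert integers with more than 4,300 digits to strings.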

I’m testing the memory limits of the cortex alone, without the supplemental structures (hypothalamus, hippocampus, etc.); this is a continuation of my efforts to understand savant syndrome.

This model is using 5.5K neurons (9 types) and 30K synapses (13 types) to accurately recall the 80 categories for the 400,000 items/patterns learned (80 × 10 × 500, or 5,000 per category). My calculated theoretical limit for 5.5K neurons is 4 million facets/memory engrams. Paradoxically, the more complex the data/pattern, the easier it is to retrieve.
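The figures in this paragraph can be cross-checked with a few lines of arithmetic. The sketch only multiplies and divides the numbers quoted in the post (the post doesn't say what the factors 10 and 500 stand for, so they are treated as plain multipliers); the per-neuron ratios are simple derived quantities, not a claim about how the model actually stores engrams.

```python
# Numbers quoted in the post; the meaning of the 10 and 500 factors is not stated.
categories, variants, samples = 80, 10, 500
neurons, synapses = 5_500, 30_000
stated_limit = 4_000_000  # the author's theoretical limit for 5.5K neurons

items = categories * variants * samples
per_category = items // categories
print(items, per_category)                                             # 400000 5000
print(f"{items / stated_limit:.0%} of the stated limit")               # 10% of the stated limit
print(f"{items / neurons:.1f} items per neuron")                       # 72.7 items per neuron
print(f"{stated_limit / neurons:.0f} engrams per neuron at the limit")  # 727 engrams per neuron at the limit
```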

The gaps between the sensory input patterns prevent the connectome from learning and predicting the order of the patterns; they give the GTP time to settle before each input and let the connectome experience each pattern individually. Although I’ve tried to make the test patterns as distinct as possible, you might notice that a second spike appears for certain patterns; this reflects a similarity between the patterns, two words that rhyme, for example.
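A minimal sketch of a presentation schedule along these lines: each pattern is followed by a run of blank "gap" steps in which nothing is presented. The frame format, gap length, the `build_schedule` name and the optional per-epoch shuffle are all assumptions for illustration; the post only states that gaps are used.

```python
import random


def build_schedule(patterns, gap_steps, epochs, shuffle=True, seed=0):
    """Hypothetical scheduler returning (label, frame) steps with blank gaps.

    `patterns` maps a label to a list of equal-width frames; a label of None
    marks a blank gap step in which nothing is presented.
    """
    rng = random.Random(seed)
    width = len(next(iter(patterns.values()))[0])
    blank = [0] * width
    schedule = []
    for _ in range(epochs):
        order = list(patterns)
        if shuffle:
            rng.shuffle(order)  # assumption: also vary the order between epochs
        for label in order:
            schedule.extend((label, frame) for frame in patterns[label])
            schedule.extend((None, blank) for _ in range(gap_steps))  # settling time
    return schedule


demo = {"one": [[1, 0], [0, 1]], "two": [[1, 1]]}
steps = build_schedule(demo, gap_steps=3, epochs=2)
print(len(steps))  # 18: 2 epochs x (3 pattern frames + 2 gaps x 3 steps)
```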

From 0:35 you can see each cortical region responding to the pattern facet it has learned, denoted by the matching colours on both the graph and the cortex model as it’s drawn.

The reason I’m testing on just 5.5K neurons is that the full model comprises millions of neurons, which can store trillions of engrams… and I have limited testing time.

This looks like an interesting project. I saw on your YouTube channel that you also work with vision, an area I am currently working in. I would be glad if you could elaborate on how you process visual stimuli, particularly how you handle input. Do you divide it into patches and process them through saccadic movements? Can you represent the image as a waveform (perhaps treating an image as a still video)?

Also, more generally, do you think that our current understanding of the brain is enough to reproduce it?

Hi ntoxeg

The system/model is a biological/chemical (neuromorphic) simulation of the whole human/mammalian nervous system, designed for researching/replicating intelligence, self-awareness and consciousness.

I see no point in drawing bounding boxes around objects, image segmentation, etc. These are schemas that try to recreate on a binary machine what the human brain does naturally; I’m interested in how our brain recognises reality.

I’m trying to stick as closely as I can to the human schema, so the visual module, for example, replicates the retina (rods/cones, center-surround ganglion cells), optic nerve, chiasm, optic tract, LGN, etc. I do this so that the visual sensory stream arriving at the visual cortex (V1) in the connectome model is ‘human compatible’, or as close as I can get it.
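The center-surround stage mentioned here is classically modelled as a difference of Gaussians (DoG): a narrow excitatory center minus a broader inhibitory surround. The sketch below is that textbook model, not the author's retina module; the kernel radius, the two sigmas and the function names are assumptions, and only the rectified ON-center response is computed.

```python
import math


def gaussian_kernel(radius: int, sigma: float) -> list[list[float]]:
    """Square Gaussian kernel normalised to sum to 1."""
    k = [[math.exp(-(x * x + y * y) / (2 * sigma * sigma))
          for x in range(-radius, radius + 1)]
         for y in range(-radius, radius + 1)]
    total = sum(map(sum, k))
    return [[v / total for v in row] for row in k]


def dog_kernel(radius=3, sigma_center=0.8, sigma_surround=2.0):
    """Hypothetical DoG receptive field: excitatory center minus inhibitory surround (sums to ~0)."""
    c = gaussian_kernel(radius, sigma_center)
    s = gaussian_kernel(radius, sigma_surround)
    return [[cv - sv for cv, sv in zip(cr, sr)] for cr, sr in zip(c, s)]


def on_center_response(image, radius=3, sigma_center=0.8, sigma_surround=2.0):
    """Rectified ON-center response for a greyscale image given as a list of rows."""
    kern = dog_kernel(radius, sigma_center, sigma_surround)
    h, w = len(image), len(image[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    yy = min(max(y + dy, 0), h - 1)  # replicate edge pixels at borders
                    xx = min(max(x + dx, 0), w - 1)
                    acc += kern[dy + radius][dx + radius] * image[yy][xx]
            out[y][x] = max(acc, 0.0)  # ON cells respond when the center outshines the surround
    return out


uniform = [[0.5] * 9 for _ in range(9)]
dot = [[0.0] * 9 for _ in range(9)]
dot[4][4] = 1.0
print(max(map(max, on_center_response(uniform))))  # essentially 0: no contrast, no response
```

Because the kernel sums to roughly zero, uniform illumination cancels out and only local contrast (such as the bright dot) drives a response.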

Do you divide it into patches and process through saccadic movements?

The connectome (V1) learns to recognise gradients, lines, angles, etc. from the visual streams, so yes, specific neurons/patches of cortex do become sensitive to specific stimuli. We evolved as predators; the retina doesn’t produce an image like a camera, it responds mainly to difference/change over time. Micro-saccadic movements are not random; they synchronise the optical stream with the connectome (V1).
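To illustrate the point that the retina responds mainly to change over time, here is a toy transient detector: each pixel adapts to its recent input through a leaky running average and only signals ON/OFF events when the input departs from that adapted level. This is a generic sketch, not the author's visual module; `transient_events`, the adaptation rate and the threshold are hypothetical.

```python
def transient_events(frames, adapt_rate=0.3, threshold=0.05):
    """Hypothetical toy retina: ON/OFF events from a sequence of frames.

    Each frame is a list of pixel intensities. Every pixel keeps a leaky
    running average of its input (its adapted level); an event fires only when
    the input departs from that level by more than `threshold`.
    """
    adapted = list(frames[0])
    events = []
    for t, frame in enumerate(frames[1:], start=1):
        for i, value in enumerate(frame):
            delta = value - adapted[i]
            if delta > threshold:
                events.append((t, i, "ON"))
            elif delta < -threshold:
                events.append((t, i, "OFF"))
            adapted[i] += adapt_rate * delta  # adapt toward the current input
    return events


static = [[0.2, 0.5, 0.8]] * 20
step = [[0.0, 0.0, 0.0]] * 3 + [[1.0, 0.0, 0.0]] * 27
print(transient_events(static))   # []
print(transient_events(step)[0])  # (3, 0, 'ON')
```

A steady scene produces no events at all, while a sudden brightening produces a burst of ON events that fades as the pixel adapts, which is the camera-versus-retina contrast the paragraph draws.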

In this short video the connectome is not recognising the images per se; the spikes on the graph represent the concept/perception of the image in the machine’s distributed networks/‘imagination’, combined with the audio/spoken description, order of introduction, etc. Speaking the associated word elicits the same response. The concepts/memories exist within the structure of the connectome; they were learned through experience and are ‘triggered’ by the visual stimulus.

Do you think that our current understanding of the brain is enough to reproduce it?

Good question, which I can only answer from a personal research perspective. That’s the whole point of my project: to discover the underlying mechanisms and reproduce them in a machine… I’d be wasting my time if I didn’t consider/know the answer to be yes.


Every now and again I like to take a break from teaching/designing my AGIs to consider human frailties and check whether my design can simulate the symptoms and/or give any insights into the diagnosis, prognosis or cure.

I have a list, roughly ordered by complexity, and today it’s the turn of terminal or paradoxical lucidity (PL). PL is one of nature’s cruellest tricks: approx. 75% of patients with long-term dementia will become fully/partially conscious/lucid shortly before they die. It’s a very complex diagnosis that ties into many other conditions, and I’m greatly oversimplifying the topic for the purpose of explanation.

https://www.sciencedirect.com/science/article/pii/S1552526019300950

Considering the phenomenon in its simplest terms obviously raises the question of how this can happen/function. It seems intuitive that, for normal(ish) function to return, the symptoms of dementia cannot be caused by permanent damage/change, nor can something like a build-up of amyloid plaque be ultimately responsible; yet something is impeding consciousness, so what could it be?

Keep in mind I have already done this for a myriad of conditions and phenomena, so I have insight into how my model behaves/functions. I’ve replicated optical/audio illusions, pareidolia, schizophrenia, hallucinations, hypnotism, meditation (states of mind), epilepsy, anaesthesia, NDEs (near-death experiences), and many more, all within the same model.

First, I read as much empirical information about the subject as possible. Then I formulate a theory of how those symptoms could arise and manifest within my model. I then alter the model’s balances and test, repeating until I get the desired results and making notes all the way.

Within my model, memory consolidation is extremely sensitive to the base frequencies of the Global Thought Pattern (GTP). The high-dimensional facets of memories are encoded/indexed by the state of the GTP performing the task at hand.

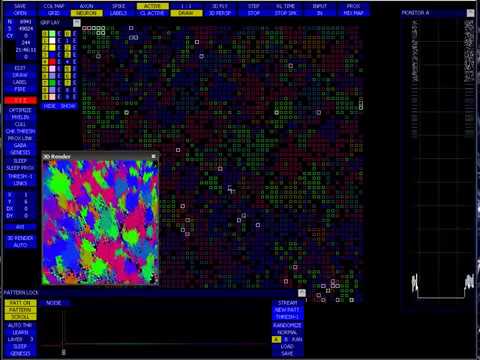

This shows a small section (1.2 mm², 0.01%, 10K neurons, 200K synapses) of cerebral cortex from my model; I use it for testing hypotheses, and it encompasses all the functionality of the full model. It has learned 40K memory engrams segmented into 80 pattern concepts, along with a regular base GTP rhythm. The graph (lower left) is the functional equivalent of real-time, colour-coded Golgi staining and shows the confidence the model has in recognising the current pattern, indicated by the scrolling bar. Notice the actual pattern stream/matrix on the upper right, with the injected regular GTP rhythm just below it.

On the first pass the model shows very high confidence in recognising all the patterns; both the episodic sequence memories and the memories regarding the pattern structure are being recalled/accessed. On the second pass I change the base frequency of just the GTP; notice how memory retrieval/recognition becomes sporadic. On the third pass I cut the GTP, and the confidence drops completely even though the 80 patterns are still being injected. I then re-establish the GTP and normal operation resumes. This shows how reliant/sensitive the system is to the state of the underlying base GTP frequencies.
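The post doesn't describe how the GTP gates recall, so the following is only a loose toy analogy of the three passes, not the author's model. It assumes the GTP can be stood in for by a single sine "base rhythm", and that recall confidence is a fixed pattern-match score multiplied by how well the injected rhythm phase-locks (in-phase normalised correlation per window) to the rhythm present during learning. All names and numbers (10 Hz learned rhythm, 11 Hz shifted rhythm, 100-sample windows, 0.95 match) are hypothetical.

```python
import math


def rhythm(freq_hz, n, fs):
    """n samples of a unit sine 'base rhythm' at freq_hz, sampled at fs."""
    return [math.sin(2 * math.pi * freq_hz * t / fs) for t in range(n)]


def phase_lock(reference, injected):
    """Toy lock measure: in-phase normalised correlation clamped to [0, 1]; 0 if silent."""
    dot = sum(r * s for r, s in zip(reference, injected))
    norm = math.sqrt(sum(r * r for r in reference) * sum(s * s for s in injected))
    return max(dot / norm, 0.0) if norm else 0.0


def confidence_trace(pattern_match, reference, injected, window):
    """Hypothetical recall confidence per window: pattern match gated by rhythm lock."""
    return [
        pattern_match * phase_lock(reference[i:i + window], injected[i:i + window])
        for i in range(0, len(reference) - window + 1, window)
    ]


fs, n, window, match = 1000, 1000, 100, 0.95
learned = rhythm(10, n, fs)                                          # rhythm present during learning
pass1 = confidence_trace(match, learned, rhythm(10, n, fs), window)  # same base frequency
pass2 = confidence_trace(match, learned, rhythm(11, n, fs), window)  # shifted base frequency
pass3 = confidence_trace(match, learned, [0.0] * n, window)          # rhythm cut
for name, trace in (("pass 1", pass1), ("pass 2", pass2), ("pass 3", pass3)):
    print(name, [round(c, 2) for c in trace])
```

In this toy, a matching rhythm gives steady confidence, a slightly shifted rhythm drifts in and out of phase so confidence comes and goes, and a silent rhythm gives zero confidence even though the pattern-match score never changes.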

The slow onset of dementia resembles the second pass; it’s not like the global GTP disruption caused by anaesthetic, so I don’t think it’s an imbalance in neurotransmitter levels/medium. It must also be affecting the well-established networks with diminished plasticity; otherwise the brain would just adapt to the disruptions and wouldn’t then be able to exhibit the PL phenomenon.

So one cause of dementia could be an alteration of the base frequencies within the GTP, and the PL phenomenon could mean that whatever is causing the phase change is related to a condition that rises or diminishes just before death, allowing the GTP to phase back through its normal frequency domain and consciousness to temporarily return. My current main candidate is intracranial pressure, as altering the shape of the connectome can also adversely affect the phase of the GTP; further pondering is required.

This is very nice!

Thanks, I’m pleased you like it. Yes, the goal is to approximate the human brain and then improve on it.

I do read your posts; interesting work, and it’s good to see you’re getting to grips with Python.


I’m a few posts/updates behind, so…


Frontal cortex development… management and beyond!

(we know a good planet that may benefit from that)

Great work, sir…