I made a Reddit post on my work:

https://www.reddit.com/r/agi/comments/gmmrbr/a_natural_and_explainable_brain_my_design/

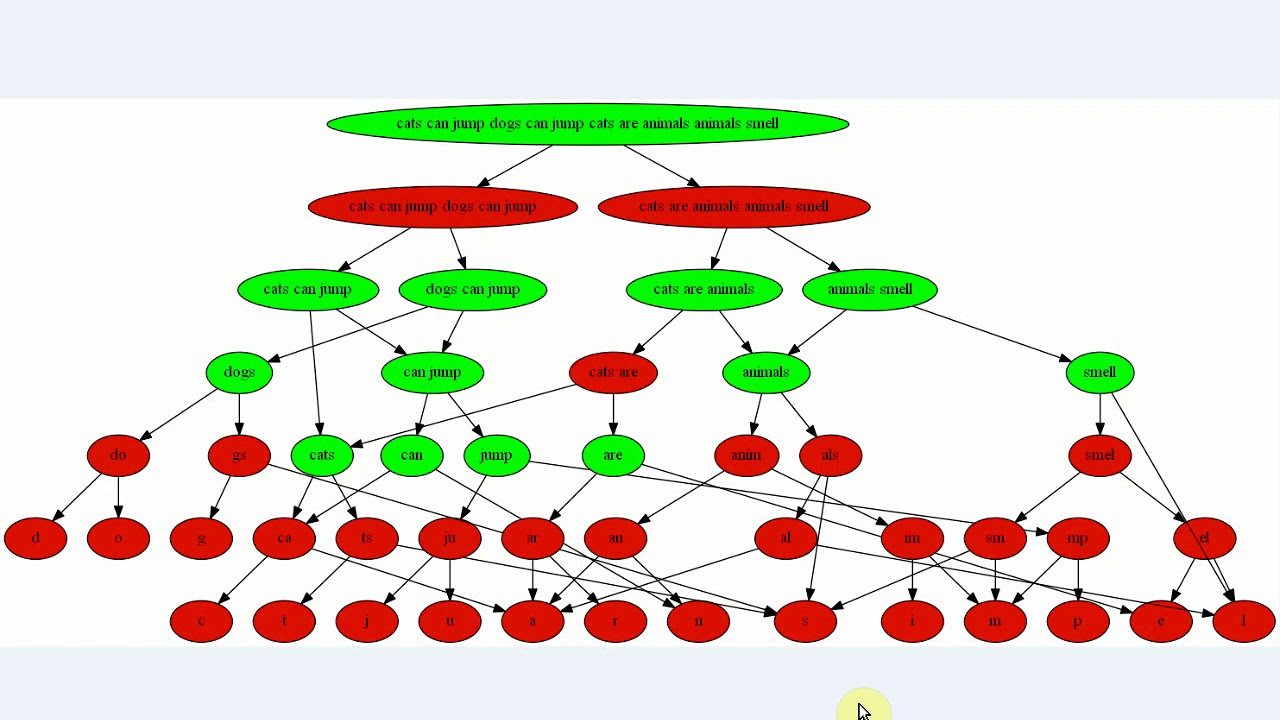

And I made a new video:

Why don’t others explain GPT-2 or Transformers like this? Why all the hard black-box algebra?

The world record for compressing enwik8 (100MB, mostly text) is 14.8MB. Compression is a better evaluation metric than perplexity. Judging by OpenAI’s own benchmark, GPT-2 could compress enwik8 to maybe about 12MB. My compressor, explained in the video, is fully understood and gets a score of 21.8MB. Algorithms in the Hutter Prize have already shown that grouping related words (mom/father) helps compression a great amount, and so does boosting recently activated letters, or better yet words, if nobody has tried that yet (similar words are likely to occur again soon). I have yet to add these to my letter predictor. So I understand well how to get a score of about 16MB at least. I think that gives my points a lot of weight.
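As a rough illustration of scoring a letter predictor by compression, here is a minimal sketch. It is not the compressor from the video: the function name `compressed_bits`, its parameters, the add-one smoothing, and the way the recency boost is applied are all assumptions made for this example. It sums the ideal code length, -log2(p), of each letter under an adaptive order-N context model, and can optionally add weight to letters seen in the last few positions.

```python
import math
from collections import defaultdict, deque


def compressed_bits(text, order=2, recency_window=0, recency_weight=0.0):
    """Ideal coded size of `text` in bits under an adaptive letter model.

    Hypothetical helper for illustration only. The alphabet is assumed to be
    known to the decoder in advance, which a real compressor would have to
    pay for.
    """
    alphabet = sorted(set(text))
    counts = defaultdict(dict)             # context -> {next letter: count}
    recent = deque(maxlen=recency_window)  # last few letters seen
    total_bits = 0.0
    for i, ch in enumerate(text):
        context = text[max(0, i - order):i]
        seen = counts[context]
        # Add-one smoothing so every letter keeps a nonzero probability.
        scores = {a: seen.get(a, 0) + 1.0 for a in alphabet}
        # Recency boost: letters seen very recently get extra weight.
        for r in recent:
            scores[r] += recency_weight
        p = scores[ch] / sum(scores.values())
        total_bits -= math.log2(p)         # cost of coding this letter
        # Learn only after predicting, as a decoder would have to.
        seen[ch] = seen.get(ch, 0) + 1
        recent.append(ch)
    return total_bits


if __name__ == "__main__":
    sample = "the cat sat on the mat. " * 20
    for n in (0, 1, 2):
        print(n, round(compressed_bits(sample, order=n) / 8), "bytes")
```

Dividing the result by 8 gives bytes, so two predictors can be compared on the same text in the same way the enwik8 scores above are compared.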

Another thing my current code doesn’t do yet is typo-robust matching and time-delay similarity of positions. For example, “hor5e the” should partially activate “that zebra”, because horse and zebra are similar to some degree and sit in a similar position in the order. This gets passed up convolutionally to higher layers (by using time-delay “windows”).
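One way to picture that partial activation is the sketch below. Everything in it is an assumption for illustration: the tiny `VOCAB`, the hand-set `RELATED` scores, the function names, and the `delay_decay` factor are made up, and spelling similarity comes from the standard library's `difflib.SequenceMatcher` rather than anything in my code. It covers a single layer only, with no stacking into higher layers.

```python
from difflib import SequenceMatcher

# Hypothetical vocabulary and hand-set relatedness scores (assumptions).
VOCAB = ["horse", "zebra", "the", "that"]
RELATED = {
    frozenset(("horse", "zebra")): 0.6,
    frozenset(("the", "that")): 0.7,
}


def spelling_similarity(a, b):
    """Typo tolerance: 1.0 for identical strings, lower for partial overlap."""
    return SequenceMatcher(None, a, b).ratio()


def word_similarity(heard, stored):
    """Resolve `heard` to its closest known word, then score its relatedness."""
    best = max(VOCAB, key=lambda w: spelling_similarity(heard, w))
    spell = spelling_similarity(heard, best)
    meaning = 1.0 if best == stored else RELATED.get(frozenset((best, stored)), 0.0)
    return spell * meaning


def activation(heard_words, stored_words, delay_decay=0.5):
    """Average best match per stored word, discounted by position offset."""
    total = 0.0
    for j, stored in enumerate(stored_words):
        total += max(
            word_similarity(h, stored) * delay_decay ** abs(i - j)
            for i, h in enumerate(heard_words)
        )
    return total / len(stored_words)


if __name__ == "__main__":
    print(activation(["hor5e", "the"], ["that", "zebra"]))   # partial match
    print(activation(["that", "zebra"], ["that", "zebra"]))  # exact match
```

The position discount plays the role of the time-delay window here: a related word still counts when it is one slot away, just for less.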