✁Tldr✁:

My “Supersymmetric Artificial Neural Network” model seeks to generate a more sophisticated/better performing “thing labeler”. The better your machine learning model is, the better you can label things.

✐Slightly Long version✐:

1. A common case in machine learning is trying to do things like image classification, or as my LinkedIn connection, the Chief Decision Scientist at Google, puts it: “Machine learning is a thing-labeler, essentially.”

▷▷▷▷ For example, in a self-driving car, convolutional neural network software running on the car's chip can distinguish between complex objects in the world, like people or other cars. People are complex things, with many features. Old machine learning models like simple Perceptrons could distinguish between far simpler things, like simple numbers, but not complex objects.

▽

2. In the earlier days of machine learning, models like the simplest Perceptron-like software did not have the capacity to distinguish between complex objects in the world. (Even if modern computing power had existed back then, simple Perceptrons would still have been limited by their own structure.)

▽ ▼

3. Item [2] above happens because machine learning software, from old simple Perceptrons to modern Convolutional Artificial Neural Networks, uses numbers and operations on those numbers (such as multiplication) to represent whatever thing it is being used to classify. Crucially, the numbers and operations that Perceptrons used were not sophisticated enough to “learn” or capture complicated things like cars and cats.

▷▷▷▷ a. Machine learning works similarly to how children learn. At first, children are terrible at identifying objects, aka labeling things in the world, so they label with high error rates.

▷▷▷▷ b. In the child’s formative years, parents can guide them by saying “this is a cat” and “this is a dog”.

▷▷▷▷ c. Each time the child learns, the child gets better at labeling things and the error gets smaller and smaller, i.e. the child’s predictions get closer to ground truth, and the child’s neurons change over time to reflect this error minimization.

▷▷▷▷ d. In the case of classifying a 10x10 picture, for example, 10x10=100 pixels will be passed to the machine learning model, which has somewhere to store what it learns, i.e. something like a child’s brain, but in the form of numbers and mathematical operations on those numbers. (Biological brains can be seen as events or operations happening on neurons, which play the role of objects or numbers.)

▷▷▷▷ e. Similar to how biological brains change or “re-wire” each time to reflect changes in learning performance, machine learning models change their numbers by operations like multiplication.

▷▷►► f. The type of numbers that exist in a machine learning model, and the type of operations that occur on those numbers, determine how well the model can do particular tasks, and whether it can do them at all. As an example, bird brains change in a different way than human brains when learning occurs. The brain types here, and their events, can be likened to machine learning models’ numbers or storage, and their operations.

△ ▲
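To make steps a. to f. concrete, here is a minimal sketch of a one-layer "thing labeler" learning on 10x10 pictures. It is only an illustration and not my Supersymmetric model: the random pictures, the labeling rule (top half brighter than bottom half), the learning rate and the step count are all assumptions made up for the example, and the model is plain logistic regression trained by gradient descent rather than a historical Perceptron.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical toy data: 200 flattened 10x10 "pictures" (100 pixels each),
# with pixel values shifted to lie in [-0.5, 0.5].
images = rng.random((200, 100)) - 0.5
# Hypothetical labeling rule: 1 if the top half is brighter than the bottom half.
labels = (images[:, :50].sum(axis=1) > images[:, 50:].sum(axis=1)).astype(float)

# What the model "knows": one number (weight) per pixel, plus a bias.
weights = np.zeros(100)
bias = 0.0
learning_rate = 0.5  # assumed value, chosen small enough for steady progress


def predict_probability(x, w, b):
    """Weighted sum of the pixels, squashed into a 0..1 confidence for label 1."""
    return 1.0 / (1.0 + np.exp(-(x @ w + b)))


loss_history = []
for step in range(200):
    p = predict_probability(images, weights, bias)
    # Cross-entropy loss: how far the predictions are from the ground truth.
    loss = -np.mean(labels * np.log(p) + (1 - labels) * np.log(1 - p))
    loss_history.append(float(loss))
    # Gradient descent: multiply the error by the inputs and nudge the numbers.
    error = p - labels
    weights -= learning_rate * (images.T @ error) / len(labels)
    bias -= learning_rate * error.mean()

final_guess = predict_probability(images, weights, bias) > 0.5
error_rate = float(np.mean(final_guess != (labels == 1.0)))
print(f"loss: {loss_history[0]:.3f} -> {loss_history[-1]:.3f}")
print(f"share of pictures still mislabeled: {error_rate:.2f}")
```

The 100 weights play the role of the child's brain in the analogy: each pass compares the guesses with the "parent's" labels and adjusts the numbers by multiplication and addition so that the error shrinks.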

**An evolution of models, from old to mine**

When neural networks learn, their weights are altered. Depending on the architecture, those weights fall into various types of spaces, and those spaces can be described by the variety of groups available from group theory. Different groups, and hence different neural network architectures, deliver different representation capacity with respect to the inputs that the learning models are trying to label.

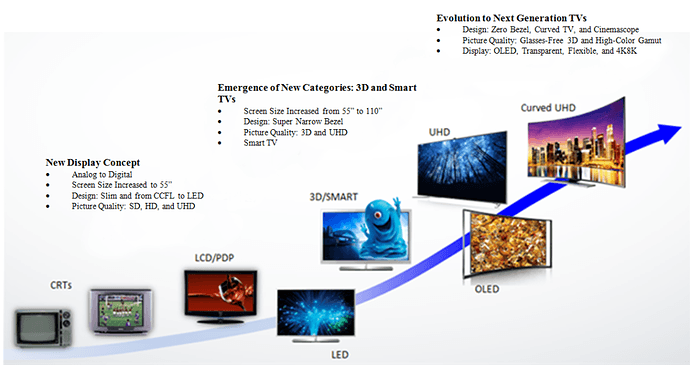

a.] Think about the series of television screens since televisions first appeared.

b.] Each new generation of televisions had more and more representation capacity. Imagine the number of pixels, or the TV's structure, to be a type of group theory matrix. Imagine the number and quality of pictures that each new generation of television could express as a distinct space belonging to each such matrix.

c.] For example, the O(N) family of structures is not ‘connected’ enough: it splits into two separate pieces, with determinant +1 and −1. SO(N) instead concerns connected structures, which suits gradient descent, since small weight updates never need to jump between pieces. SU(N) models deliver even more connectivity (SU(N) is simply connected), and their matrices stay invertible after operations on them. I estimate that SU(M|N), aka supersymmetry-based or super Lie group-based matrices (as seen in my “Supersymmetric Artificial Neural Network”), is an even richer way to represent inputs compared to current deep learning strategies, possessing the features of SU(N) and beyond.
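The group names in c.] can be checked numerically on tiny matrices. The sketch below covers only the ordinary groups O(N), SO(N) and SU(N), not SU(M|N) or my model; the helper names and the 2x2 example matrices are hypothetical and chosen purely for illustration.

```python
import numpy as np


def is_orthogonal(m):
    """O(N): real matrices with M^T M = I."""
    return bool(np.allclose(m.T @ m, np.eye(len(m))))


def is_special_orthogonal(m):
    """SO(N): orthogonal matrices whose determinant is +1."""
    return is_orthogonal(m) and bool(np.isclose(np.linalg.det(m), 1.0))


def is_special_unitary(m):
    """SU(N): complex matrices with M^dagger M = I and determinant 1."""
    unitary = np.allclose(m.conj().T @ m, np.eye(len(m)))
    return bool(unitary and np.isclose(np.linalg.det(m), 1.0))


theta = 0.3  # arbitrary angle for the examples
rotation = np.array([[np.cos(theta), -np.sin(theta)],
                     [np.sin(theta), np.cos(theta)]])
reflection = np.array([[1.0, 0.0],
                       [0.0, -1.0]])
phase = np.array([[np.exp(1j * theta), 0.0],
                  [0.0, np.exp(-1j * theta)]])

# Both matrices are in O(2), but only the rotation is in SO(2).
print("rotation:   O(2)?", is_orthogonal(rotation),
      " SO(2)?", is_special_orthogonal(rotation))
print("reflection: O(2)?", is_orthogonal(reflection),
      " SO(2)?", is_special_orthogonal(reflection))

# SU(2) is closed under multiplication, and each inverse is the conjugate transpose.
product = phase @ phase
print("phase in SU(2)?", is_special_unitary(phase),
      " product in SU(2)?", is_special_unitary(product))
print("inverse equals conjugate transpose?",
      bool(np.allclose(np.linalg.inv(phase), phase.conj().T)))
```

The reflection has determinant −1 while the rotation has determinant +1. Since the determinant changes continuously and can only be ±1 inside O(N), no sequence of small steps within O(N) leads from one to the other, which is the sense in which O(N) is not connected.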

**Somewhat new?**

Notably, my Supersymmetric Artificial Neural Network is only somewhat new: it still concerns artificial neural networks, although it is a separate type.

Think about the pseudocode behind the modern Generative Adversarial Neural Network, or another separate older type of model, the Convolutional neural network, or yet again a much older simple Perceptron. All are types of neural networks, but they each represent quite different genera of models altogether. Likewise, Convolutional Neural Networks were somewhat new in their emergence, but not entirely so. After that, Generative Adversarial Neural Networks were somewhat new in their arrival, but not entirely so. This is the general path of progress in machine learning.

As novel neural network types emerged over time, more cognitive tasks could be approximated by machine learning models, such as self-driving car models or automated disease diagnosis models. As such, my “Supersymmetric Artificial Neural Network” model seeks to contribute to further non-trivial progress, again as a separate genus of artificial neural networks altogether.

**Conclusion**

So, machine learning has seen a progression of ways for models to store information about the things they label, seen in the types of numbers the models use and the operations they perform on those numbers.

Over time, machine learning has moved from simple integers, to continuous decimals, to special orthogonal matrices, to unitary matrices, to special unitary matrices involving complex numbers.

☆☆☆☆☆☆ State-of-the-art machine learning today, my model aside, uses numbers like complex numbers, and their operations, to label things. My model additionally uses supersymmetric numbers and their operations, which in string theory are observed to have more information-holding capacity.

Being a thing labeler may sound boring and unimportant, but humans do a lot of thing labeling overall, like labeling a facial expression, heart irregularity in a patient, pedestrians on a road while driving, the enterprise of science used to label the universe, etc…